Mapping emotions to music

with Sara Burns and Galen Chuang

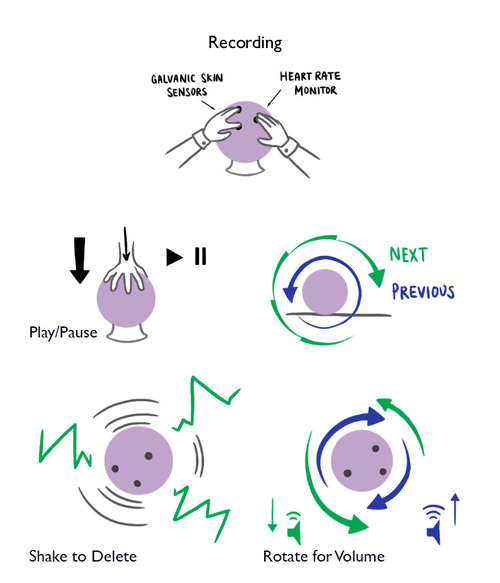

I was in charge of:

- creating the visual materials

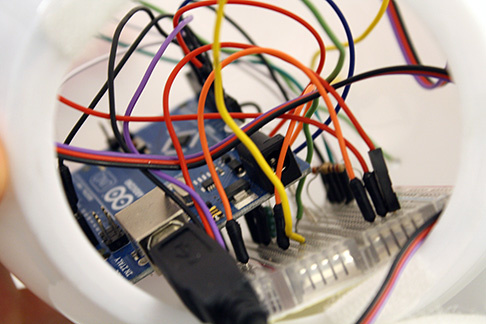

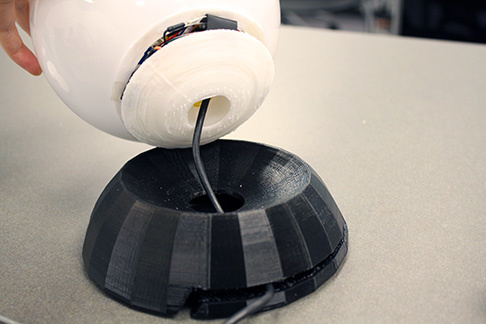

- 3D modelling and physically engineering the prototype

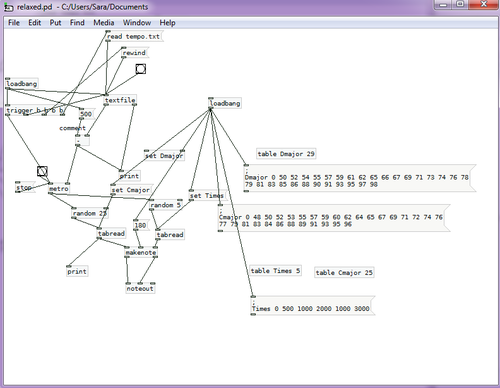

- coding the volume-control interactions

EmotiSphere on Vimeo.